What Is Good Code in the AI Era?

Every abstraction shift in software engineering sparks anxiety. With AI/Agentic code generation, what changes isn't whether engineers matter, but what makes a good engineer.

January 16, 2026

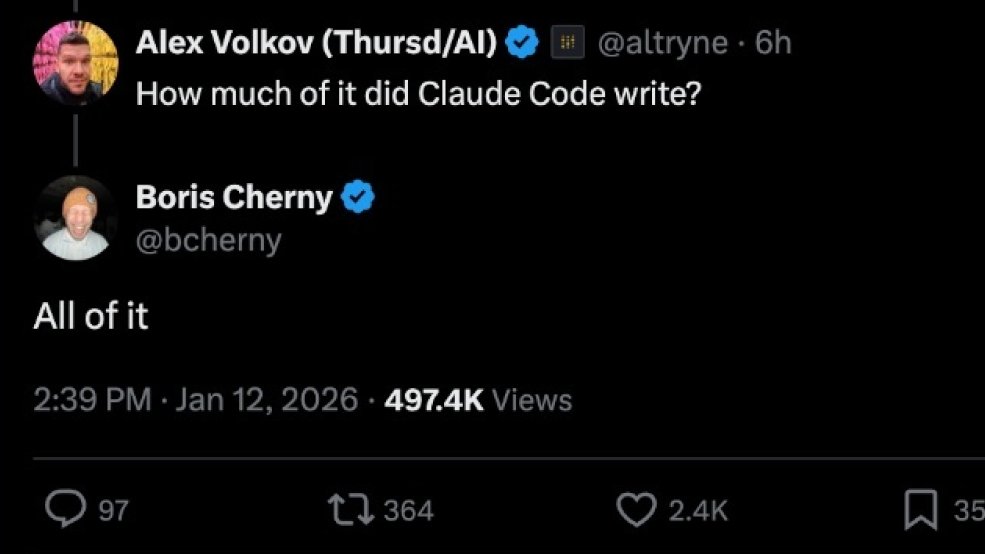

A few days ago, Anthropic shipped Cowork, a collaborative feature for Claude. Build time: a week and a half. Method: extensive use of AI code generation. When an Anthropic employee confirmed this, the reaction was immediate. Some celebrated the velocity. Others questioned what it meant for software engineering.

But the feature shipped. It works. And users don't care how it was written.

This is where we are now.

Every few decades, software engineering quietly changes what it values.

Not through a press release.

Not through consensus.

But through abstraction.

There was a time when "real programming" meant working in machine code. Then assembly. Then C. Then higher-level languages, frameworks, and libraries. Each transition sparked the same anxiety: we're losing understanding, we're making developers lazy, this isn't real engineering anymore.

And every time, the same thing happened.

The definition of being a good engineer shifted.

We didn't stop caring about correctness or performance. We stopped caring about how close the programmer was to the machine and started caring about how well the system served its purpose.

AI code generation is not a break from that pattern. It's the next step in it.

Abstraction Has Always Changed What "Good" Means

Writing assembly was once a mark of mastery. You controlled memory, registers, timing. You knew exactly what the machine would do because you told it, instruction by instruction.

But as compilers improved, that skill stopped being the bottleneck. The world didn't need more people who could optimize loops by hand. It needed people who could build systems, reason about complexity, and ship software that actually worked for humans.

So "good code" stopped meaning clever and started meaning clear.

In some ways, good code has always had a social definition. It's what earns respect from your peers, what demonstrates mastery within your community. In the 1970s, you proved yourself by making programs fit in impossibly small memory. In the 1990s, elegant object-oriented designs signaled sophistication. By the 2010s, the most respected code was the kind a junior engineer could understand.

The shift wasn't arbitrary. Each era's definition of "good" aligned with that era's bottleneck. When memory was tight, making things fit was a virtue. When developer time became the constraint, clarity became one. The code that solves the current bottleneck gets called "good."

AI code generation introduces a similar shift. When a machine can generate working code in seconds, the value is no longer in producing code. It's in deciding what code should exist at all, and what constraints it must obey.

What Changes in the AI Era

AI makes code abundant. Cheap. Replaceable.

Understanding, however, becomes scarcer.

In this environment, good code starts to mean something different.

Good code represents intent clearly

Not just what the system does, but why it exists. Code that can be explained succinctly is more valuable than code that is merely correct. When you read it, you should understand not just the implementation, but the constraints it respects and the tradeoffs it makes.

If an AI or another engineer can't safely modify it, the problem isn't the AI. It's the code.

Good code survives change

When code can be rewritten quickly, durability matters more than cleverness. Systems are judged not by how perfect they are today, but by how well they adapt tomorrow.

This mirrors the compiler transition. Once we trusted compilers, we optimized for maintainability, not instruction counts.

Good code encodes judgment

AI can generate implementations. It cannot decide what tradeoffs matter, what constraints are non-negotiable, what should not be built, or where failure is acceptable.

Those decisions live in architecture, interfaces, invariants, and tests. That's where human judgment now concentrates.

Good code requires competent verification

When AI generates implementations, engineers need enough literacy to evaluate correctness, spot security issues, and assess performance characteristics. This creates an interesting paradox: you need implementation knowledge to validate code you didn't write.

The skill isn't obsolete. It's repurposed. You're essentially doing code review on a very productive colleague who doesn't attend standups, doesn't know your system's constraints, and is confidently wrong about edge cases. You need to spot when generated code works in the demo but fails in production, introduces security holes, or makes assumptions your database can't support.

This skill builds through practice: reviewing AI-generated code with the same rigor you'd apply to a junior engineer's first PR. Ask: What edge cases did it miss? What assumptions did it bake in? What will break when the system scales? The engineers developing this muscle now will be the ones teams rely on.

Code as a Temporary Artifact

One uncomfortable implication of AI-assisted development is this:

Code is no longer precious.

It is closer to a build artifact than a craft object.

This is more true for some code than others. Application logic, glue code, routine CRUD operations are increasingly disposable. But foundational systems like databases, compilers, operating systems, and safety-critical software still reward deep craft and longevity. (Someone still has to write the thing that writes the thing.)

The shift isn't that all code becomes temporary. It's that more code does, and knowing which code deserves permanence becomes its own form of judgment.

For the growing category of disposable code, quality moves upstream into problem definition, system boundaries, feedback loops, and observability.

Good code is easy to change.

Bad code demands to stay exactly as it is.

This is not new. We already live in a world where frameworks are replaced every few years, languages rise and fall, and systems outlive their original implementations. AI simply accelerates the truth that was already there.

This shift can feel like devaluation. If you spent years mastering implementation, watching AI generate it in seconds stings. But the craft isn't diminished. It's redirected. The judgment that made you good at writing code is exactly what makes you good at evaluating it, architecting systems, and setting constraints. The expertise doesn't disappear. It compounds in a new direction.

The Familiar Fear, Repeating Again

Many of the critiques of AI-generated code sound familiar: "You don't really understand what's happening underneath." "This creates dependency." "It makes developers worse."

These were said about compilers. About garbage collection. About ORMs. About Stack Overflow. (That last one was probably fair.)

Each time, the fear wasn't irrational. It was just misplaced.

Understanding mattered as much as ever. What you needed to understand changed.

The engineers who thrived weren't the ones who clung to the old definition of skill. They were the ones who learned to operate at the new level of abstraction.

A New Working Definition

In the AI era, good code is not defined by how it was written.

Good code clearly expresses intent, encodes correct constraints, is easy to reason about, is safe to change, and enables leverage rather than demanding reverence.

The source of the code matters less than the decisions embedded in it.

What This Means in Practice

So what does this mean practically?

Invest time in understanding system architecture, not just implementation patterns. Learn to specify constraints precisely. AI will generate the code, but you define what correct means.

Practice reading code critically. Can you spot the security hole? The performance cliff? The assumption that breaks at scale?

And build judgment about when to use AI generation and when to write by hand. That discernment is the new skill.

We've Been Here Before

We didn't stop being engineers when we stopped writing assembly.

We became better ones.

AI doesn't remove the need for skill. It changes where skill lives. Away from keystrokes. Toward judgment. Toward systems thinking. Toward clarity of intent.

The engineers who matter most in this era won't be the fastest typists or the cleverest implementers.

They'll be the ones who know what to build, why it should exist, whether what was built actually matches the intent, and when it should disappear.

That has always been the real work.